iOS 26 and Liquid Glass feel like one big cover-up operation. Here’s why

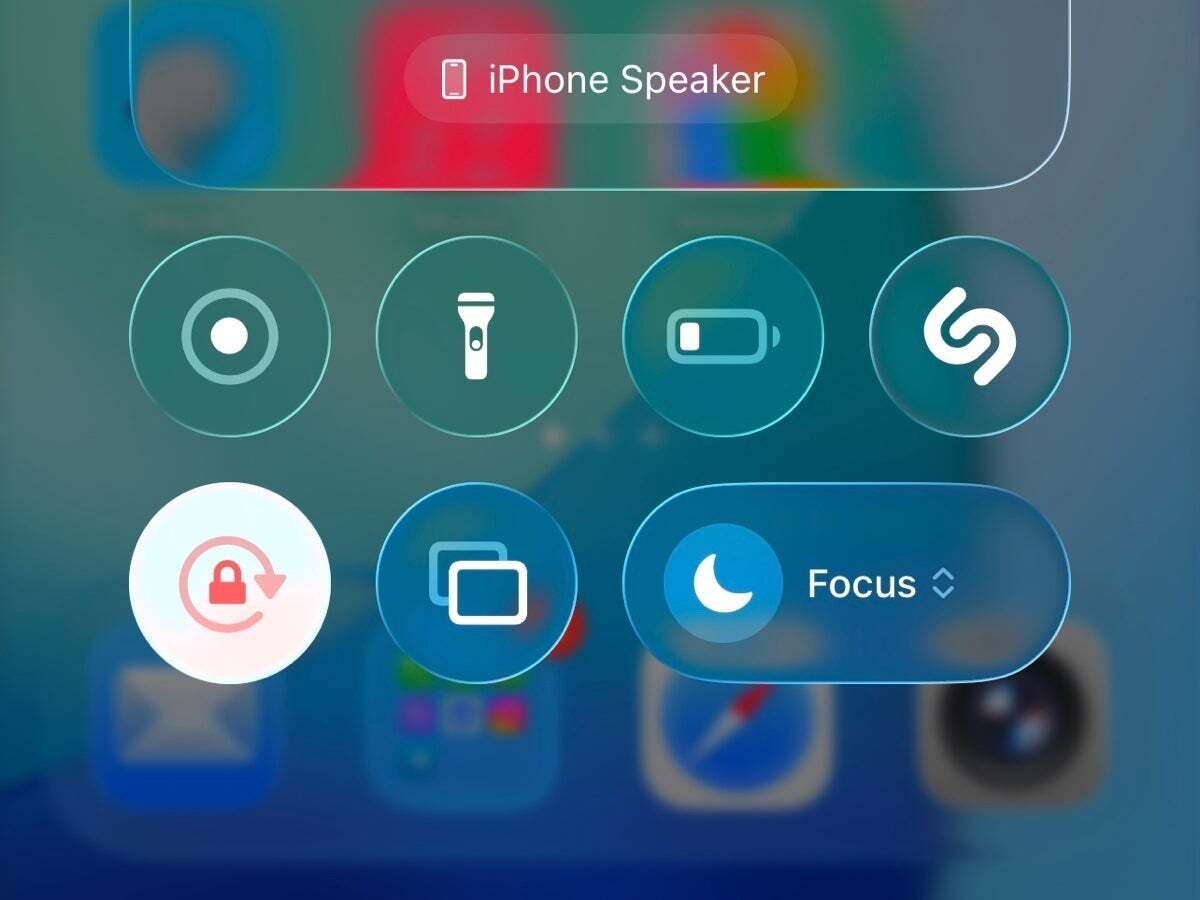

The new Liquid Glass look is one of the biggest visual overhauls in iOS.

This is all fine and dandy, but it immediately raised a couple of questions in my mind. Do we NEED a translucent interface that behaves like real glass? And what does that even mean? That it would break if you swipe or tap too fast or too hard?

The second question is right there in the text itself: Liquid Glass takes advantage of the hardware processing power of Apple’s silicon. I can’t help but wonder: don’t we have better, more useful ways to use that hardware than making things look like “glass in the real world”?

And finally, what are the chances that Apple is throwing sand in our eyes to divert our attention from much more important stuff? For example, Siri is nowhere to be seen (it was mentioned just twice during the official event), and Apple Intelligence still feels like a bad copy of what every other LLM has been doing for a long time now.

But first – let’s talk about Liquid Glass.

Form over function – people like pretty things

Beauty is in the eye of the beholder, but also in their prehistoric brain | Image by stux

There are a lot of reasons why we love polished, good-looking things, smartphone interfaces included. Some of these preferences have been deeply rooted in our brains since prehistoric times, while others we’ve learnt to value a bit later in our evolutionary journey.

Evolutionary basis of aesthetics

Our ability to recognize symmetry goes way back to prehistoric times, and it’s tied to our survival mechanisms.

We look for it not only in faces, but in everything around us: cars, furniture, the shape of your house, smartphones, clothes. The list goes on and on.

It’s a well-known phenomenon, and even though we try to escape it and judge things on other qualities, it’s so visceral that most of the time it operates beyond our conscious control.

Neuroscience and psychology of aesthetics

Dopamine – such a simple molecule but so powerful!

How does this work? Basically, without getting into boring scientific details, visually pleasing things activate a circuit in our brain tied to the dopamine reward system.

Put simply, you feel good when you see pretty things, and you actively seek them out.

This leads to some very interesting effects on our ability to be objective, and one of these is called “the aesthetic-usability effect.”

The aesthetic-usability effect

It does look good, but is it actually functional?

The aesthetic-usability effect refers to users’ tendency to perceive attractive products as more usable. People tend to believe that things that look better will work better — even if they aren’t actually more effective or efficient.

This lies at the core of many interface design decisions, including those behind iOS 26 and Liquid Glass. The effect has also been exploited for decades: anyone who has ever used Linux alongside Windows or macOS knows how powerful Linux can be and, at the same time, how most people prefer the better-looking OS. The decision happens almost instantly.

iOS 26 – Liquid Glass or smoke and mirrors?

Siri’s still in kindergarten compared to other smart assistants

Back to the topic at hand. So, people like pretty things, and Apple decided to make iOS 26 pretty with Liquid Glass. What lies behind this decision?

Probably the fact that the smarter Siri Apple has promised us is still somewhere in school. In contrast, other smart assistants and LLMs can now write scripts, generate videos and podcasts, create movies from still frames, organize your emails, and be much more useful in general with multimodal input and cloud access.

Apple Intelligence has been a major talking point ever since its official announcement last year, but it continues to lag behind the competition.

Apple made a big deal of adding Live Translate to iOS 26, but this feature has been available in Samsung’s One UI since January 2024.

The same goes for Visual Intelligence. Android has offered the ability to contextually search for an object, called ‘Circle to Search’, for more than a year now.

Is Apple playing catch-up with iOS?

All the supposedly new features in iOS 26

I believe the answer is “yes.” However, there are some positives to be taken from the latest iOS 26 announcement.

Redesigning the look of the whole system is a bold move and probably required more work than we imagine.

But the truth of the matter is that Liquid Glass looks to me like a big cover-up for the lack of new and original features. iOS 26 is playing catch-up, and it seems this will remain the case for some time.